Responsible AI

Responsible AI is a critical research area focused on ensuring that AI systems are developed ethically. At Pinterest, our team works in the gray area beyond policy enforcement, identifying opportunities where ML expertise can help keep Pinterest positive, inclusive, and welcoming. Our work is rooted in solving real user problems, and we integrate responsible AI practices throughout the ML lifecycle. We focus on three major areas: Inclusive AI, ML Fairness, and Responsible GenAI Systems.

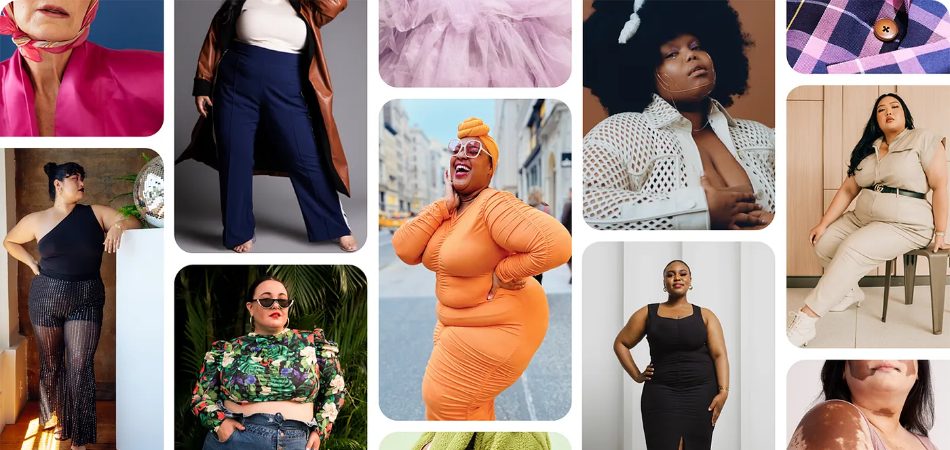

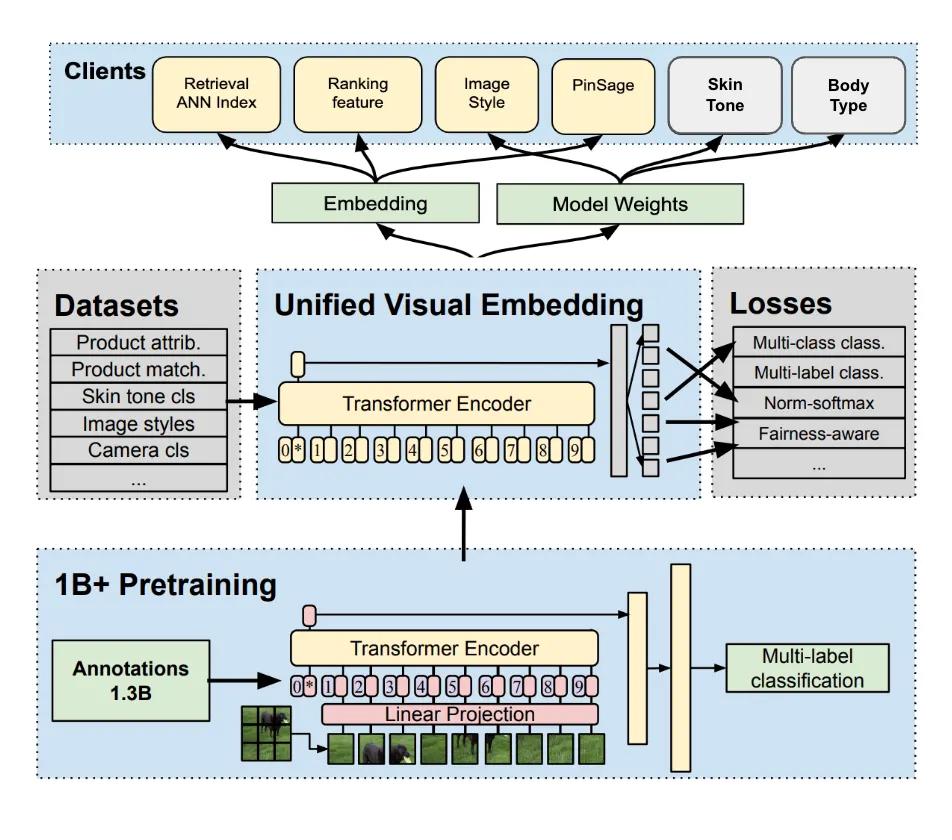

Our Inclusive AI efforts focus on improving content representation through ML solutions across Pinterest's many recommender systems. We developed the models behind Pinterest's skin tone, hair pattern, and body type search refinements, which help users narrow their searches to content that is more relevant to them. The same models also surface underrepresented content to diversify recommendations, so users can see themselves represented by default. These advances required building novel annotation pipelines, fairness-aware ranking stacks, and comprehensive evaluation frameworks. Recently, we've expanded into accessibility initiatives, applying our ML and domain expertise and partnering with accessibility experts to improve several of our accessibility features.

We've pioneered internal tooling and frameworks that enable engineering teams to systematically assess models for unintended bias, and we collaborate with partner teams across Pinterest to evaluate our ML systems for fairness. Our framework supports segmented analysis, computing metrics separately for each segment of users or content, with tooling that visualizes fairness metrics alongside performance metrics. We've also built monitoring systems that help teams maintain fairness benchmarks in production. Together, these tools embed fairness considerations directly into the ML development process.
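To make segmented analysis concrete, here is a minimal Python sketch, assuming binary labels and predictions and a single segment attribute per example. The names `segmented_report`, `disparity_ratio`, and `meets_benchmark`, and the 0.8 threshold, are hypothetical illustrations and not Pinterest's internal tooling. The min/max ratio is just one common way to summarize disparity across segments, chosen here for simplicity.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SegmentReport:
    segment: str
    count: int
    accuracy: float
    positive_rate: float


def segmented_report(labels, preds, segments):
    """Compute accuracy and positive-prediction rate separately for each segment."""
    if not (len(labels) == len(preds) == len(segments)):
        raise ValueError("labels, preds, and segments must have equal length")
    buckets = defaultdict(list)
    for y, p, s in zip(labels, preds, segments):
        buckets[s].append((y, p))
    reports = []
    for seg in sorted(buckets):
        rows = buckets[seg]
        n = len(rows)
        correct = sum(1 for y, p in rows if y == p)
        positives = sum(1 for _, p in rows if p == 1)
        reports.append(SegmentReport(seg, n, correct / n, positives / n))
    return reports


def disparity_ratio(reports, attr="positive_rate"):
    """Ratio of the smallest to the largest metric value across segments; 1.0 means parity."""
    values = [getattr(r, attr) for r in reports]
    largest = max(values)
    return 1.0 if largest == 0 else min(values) / largest


def meets_benchmark(reports, attr="positive_rate", threshold=0.8):
    """Hypothetical production check: flag a model whose disparity falls below a threshold."""
    return disparity_ratio(reports, attr) >= threshold


if __name__ == "__main__":
    labels = [1, 0, 1, 1, 0, 1, 0, 0]
    preds = [1, 0, 1, 0, 0, 1, 0, 0]
    segments = ["a", "a", "a", "a", "b", "b", "b", "b"]
    reports = segmented_report(labels, preds, segments)
    for r in reports:
        print(r)
    print("positive-rate disparity:", disparity_ratio(reports))
    print("meets benchmark:", meets_benchmark(reports))
```

In this toy data both segments have similar accuracy, but segment `a` receives positive predictions twice as often as segment `b`, so the positive-rate disparity is 0.5 and the hypothetical benchmark check fails. Reporting the per-segment table next to the aggregate metric is what surfaces gaps like this, which a single overall accuracy number would hide.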

Our Responsible GenAI Systems work applies our ML expertise to the unique challenges of deploying safe and responsible generative AI at scale. We've spearheaded Pinterest's comprehensive framework for Responsible GenAI product development, covering evaluation methodologies, red teaming approaches, and mitigation strategies. At its core is our Empathetic AI framework, an evaluation and governance methodology. That system uses several specialized models we've developed to assess generated content for quality and appropriateness, and it also incorporates many Trust and Safety and Content Quality models. We collaborate with other teams within ATG working on foundation model development and alignment, to prevent problems from the start, and with product teams through our GenAI review process, to ensure consistent standards across all generative features.